High Availability for SARK

History

sarkha was originally developed for a customer in South Africa and has since proved very popular with users who place a high value on the availability of their PBX. The goal was, and is, to provide an Asterisk-based PBX with very near 100% availability, and SARK HA gets pretty close to this goal. As part of the V3 development, sarkha was augmented with a new general-purpose module called asha (Asterisk High Availability), which, among other things, is more tunable than the original and uses rsync over SSH instead of the SMB shares the original relied on.

How it works

sarkha uses proven, standard Linux-HA (Heartbeat) cluster technology to run two Asterisk servers side by side: one active (primary) server and one passive (standby) server. If either Asterisk or Linux fails on the active server, the standby server automatically assumes control of all the resources and continues the service until the primary is once again ready to take over. The time it takes to fail over depends on how you set the Heartbeat parameters, but it is quite common for large systems (250 to 300 phones) to fail over in under 30 seconds, measured from a hard down on the primary to being able to place outbound calls on the standby.

There are three components to the solution:

- The regular Linux Heartbeat modules, which you can install with rpm or yum and can usually be found in the extras repo of the CentOS 5 and SME 8.0 distribution libraries.

- The asha rpm which manages the synchronisation of data between nodes and adds some ancillary functions such as the Asterisk watchdog daemon.

- The sarkha V3 environment rpms which manage the SARK specific integration with asha.

Contact between nodes

sarkha sets up Heartbeat to support inter-node communication over both serial (if present) and network pathways. Under normal circumstances the serial connection is recommended, because it cannot be interrupted by a network failure. If the units are too far apart for a serial connection, or there is some other reason you cannot use serial, you should consider setting a longer failover delay (Heartbeat's deadtime) in ha.cf, the Heartbeat configuration file, to compensate for network transients. On a SARK installation you make this change in the file /opt/sark/generator/sarkha.php. For a full discussion of the ha.cf file and its settings, please see the Linux-HA website.
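As a rough illustration only, the shell function below (a hypothetical helper, not SARK's generator) prints an ha.cf that uses a longer deadtime when there is no serial link. The directives are standard Heartbeat ones; the timing values, the 19200 baud rate and the eth0 interface are assumptions chosen for the example.

```shell
#!/bin/sh
# Hypothetical sketch: print an ha.cf with a longer dead time when no serial
# heartbeat link is available. NOT the SARK generator; the values and the
# eth0 interface name are illustrative assumptions.
# Usage: make_ha_cf PRIMARY_NODE STANDBY_NODE [SERIAL_DEVICE]
make_ha_cf() {
    primary=$1
    standby=$2
    serial_dev=$3

    if [ -n "$serial_dev" ]; then
        warn=5; dead=10           # serial link: fail over quickly
    else
        warn=10; dead=30          # network only: ride out short transients
    fi

    echo "keepalive 2"            # seconds between heartbeats
    echo "warntime $warn"         # warn about a late heartbeat after this
    echo "deadtime $dead"         # declare the peer dead after this
    echo "initdead 120"           # extra allowance while the nodes boot
    if [ -n "$serial_dev" ]; then
        echo "baud 19200"
        echo "serial $serial_dev" # serial heartbeat path
    fi
    echo "bcast eth0"             # network heartbeat path
    echo "auto_failback off"      # corresponds to "HA Auto-Failback? off"
    echo "node $primary"          # node names must match uname -n
    echo "node $standby"
}

make_ha_cf haprimary hastandby /dev/ttyS0
```

Keeping warntime below deadtime, and initdead well above deadtime, follows the usual Heartbeat guidance; the exact numbers you choose should reflect how noisy your network is.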

Types of failover

sarkha recognises two types of failover:

- Hard failover: the primary machine physically fails, either through a component failure or a power drop. Either way, the machine simply ceases to function and contact between the Heartbeat nodes is lost.

- Soft failover: the Asterisk software on the primary machine fails (i.e. is no longer present in memory). When this happens, a watchdog task detects that Asterisk has gone and automatically invokes a failover to the standby node.
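The soft-failover path relies on a watchdog that only checks whether Asterisk is present. A minimal sketch of that idea, assuming a Linux /proc filesystem that provides /proc/<pid>/comm (kernel 2.6.33 or later; on an EL5 kernel something like `pidof asterisk` would be used instead). The function names and the failover command are hypothetical, and this is not asha's actual watchdog.

```shell
#!/bin/sh
# Hypothetical sketch of a presence-only watchdog check (not asha's source).
# process_present NAME: succeed if any process has the command name NAME.
# Reads /proc/<pid>/comm, which needs Linux 2.6.33 or later.
process_present() {
    for comm in /proc/[0-9]*/comm; do
        # A process can exit between the glob and the read, so ignore errors.
        if [ "$(cat "$comm" 2>/dev/null)" = "$1" ]; then
            return 0
        fi
    done
    return 1
}

# watchdog_once NAME FAILOVER_CMD: run FAILOVER_CMD if NAME is not running.
watchdog_once() {
    if ! process_present "$1"; then
        echo "$1 is not running: invoking failover"
        sh -c "$2"
    fi
}

# A daemon would repeat the check every few seconds, for example
# (FAILOVER_CMD is a hypothetical placeholder):
#   while :; do watchdog_once asterisk "$FAILOVER_CMD"; sleep 5; done
```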

Limitations

There is one case that failover will not currently catch: Asterisk, for whatever reason, going into a "hang" state. We have seen this happen in the past when the system ran out of memory. Asterisk is still present in memory, but it is no longer capable of doing useful work. The watchdog daemon does not check whether Asterisk is responding; it only checks whether it is present in memory.
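To illustrate the difference between "present" and "responding", the hypothetical helper below succeeds only if a probe command completes successfully within a time limit, so a process that is present but hung would fail it. It is not part of asha. It assumes the coreutils `timeout` command (coreutils 7.0 or later, which stock EL5 does not ship), and the Asterisk probe shown in the comment is just one possible choice.

```shell
#!/bin/sh
# Hypothetical sketch, not part of asha: a responsiveness check.
# responsive LIMIT CMD [ARGS...]: succeed only if CMD exits successfully
# within LIMIT seconds. Needs the coreutils `timeout` command.
responsive() {
    limit=$1
    shift
    timeout "$limit" "$@" >/dev/null 2>&1
}

# Possible probe against a local Asterisk (assumes asterisk is on PATH):
#   responsive 5 asterisk -rx "core show uptime" || echo "Asterisk not responding"
```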

Installation

Installation is straightforward in an EL5 environment (RHEL5, CentOS 5 or SME Server 8.0). Heartbeat can be installed directly from the yum repos, and the other modules can be fetched from the download site.

Here is a regular EL5 install:

```
yum install heartbeat --enablerepo=*
rpm -Uvh asha-1.0.0-2.noarch.rpm
rpm -Uvh el5sarkha-3.1.0-2.noarch.rpm
reboot
```

Here is an SME Server 8 install:

```
yum install heartbeat --enablerepo=*
rpm -Uvh asha-1.0.0-2.noarch.rpm
rpm -Uvh smesarkha-3.1.0-2.noarch.rpm
signal-event post-upgrade
signal-event reboot
```

asha's sync component uses rsync over SSH, so you will need to generate a public/private key pair with no passphrase on the standby server using ssh-keygen. Press Enter at each prompt to accept the default file and an empty passphrase:

```
ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
a7:5b:00:4d:02:20:79:7a:4c:d8:ff:5c:7a:28:e3:d0 root@mystandby.mydomain.com
```

Now you will need to create the .ssh directory on your primary server (if it doesn't already exist) and copy the key across...

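```
# Run on the standby: create .ssh on the primary (no error if it already exists)
ssh root@myprimary.mydomain.com "mkdir -p .ssh; chmod 0700 .ssh"
# Copy the standby's public key across (this replaces any existing authorized_keys2)
scp /root/.ssh/id_rsa.pub root@myprimary.mydomain.com:.ssh/authorized_keys2
```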
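Because the scp step writes straight over any existing authorized_keys2 on the primary, you may prefer an append-only approach. The hypothetical helper below does roughly what `ssh-copy-id` does on the receiving side: it creates the directory with mode 0700, creates the keys file with mode 0600, and appends the key only if that exact line is not already present. The function name and arguments are illustrative.

```shell
#!/bin/sh
# Hypothetical helper: append a public key to an authorized keys file
# without replacing existing entries (similar in spirit to ssh-copy-id).
# Usage: add_key PUBKEY_FILE SSH_DIR [KEYS_FILE_NAME]
add_key() {
    pubkey=$1
    sshdir=$2
    keys="$sshdir/${3:-authorized_keys2}"

    # With StrictModes (the default), sshd refuses keys kept in group- or
    # world-writable files or directories, so lock both down.
    mkdir -p "$sshdir" && chmod 0700 "$sshdir" || return 1
    touch "$keys" && chmod 0600 "$keys" || return 1

    # Append only if this exact key line is not already there.
    if ! grep -qxF -f "$pubkey" "$keys"; then
        cat "$pubkey" >> "$keys" || return 1
    fi
}
```

On the primary, with the standby's key copied to a temporary file, this would be run as `add_key /tmp/id_rsa.pub /root/.ssh`. From the standby, `ssh root@myprimary.mydomain.com 'mkdir -p .ssh && chmod 0700 .ssh && cat >> .ssh/authorized_keys2' < /root/.ssh/id_rsa.pub` also appends rather than overwrites, though it does not skip duplicates.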

Configuration

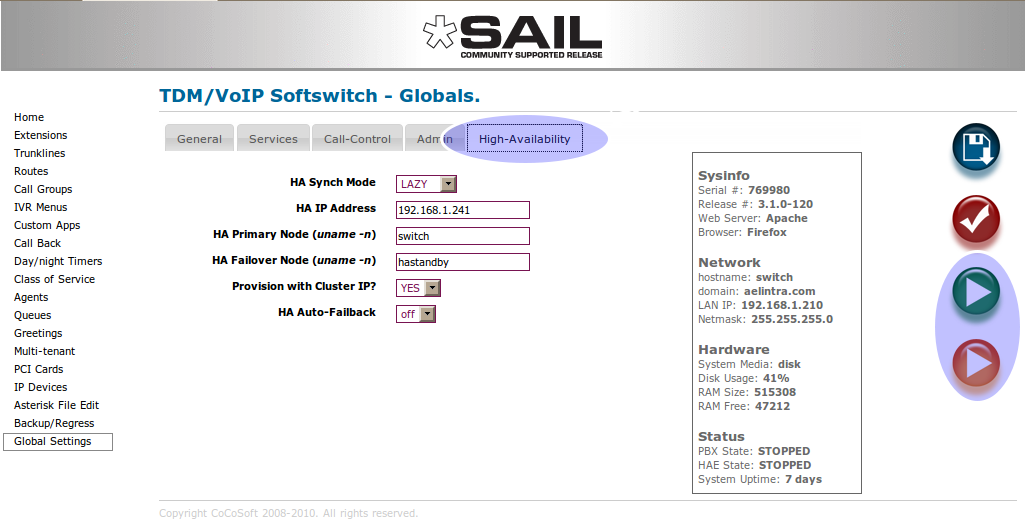

Once installed, you will find some new options and buttons on the SARK global settings panel.

As you can see, there is a new "High Availability" tab and an additional Start/Stop button. The extra Start/Stop button controls the high-availability engine (Heartbeat), and you can decide from Globals whether or not to enable it. There are also some new variables to fill out before you can start your HA image. Be aware that you will need to fill this information out (at least initially) on BOTH systems (primary and standby).

- HA Synch Mode (LAZY|LOOSE)

Dictates whether or not automatic synchronisation occurs between the two nodes. LAZY enables synchronisation; LOOSE disables it.

- HA IP Address

The virtual IP address used by Heartbeat to hand control back and forth between the nodes. This can be any free static IP address on your subnet.

- HA Primary Node (uname -n)

Heartbeat uses node names to find its partners. The primary node is the name given by uname -n on the primary node.

- HA Failover Node (uname -n)

Heartbeat uses node names to find its partners. The failover node is the name given by uname -n on the standby node.

- Provision with Cluster IP? (YES/NO)

This tells the provisioning subsystem whether or not it should provision the phones to register to this server's "real" IP address or to the cluster's virtual IP address. It is usual to set this to YES when the cluster is active.

- HA Auto-Failback? (off/on)

This tells the HA component whether or not to automatically fail back to the primary after an initial failover to the standby. Most users run with this off.
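The first four settings appear to correspond to the HASYNCH, HACLUSTERIP, HAPRINODE and HASECNODE entries that SARK writes into /etc/asha.conf (see Advanced). As a hypothetical pre-flight check, not part of SARK, the function below sources such a file and flags obviously bad values. The IP test is deliberately rough: it only checks that the value is non-empty and made of digits and dots.

```shell
#!/bin/sh
# Hypothetical pre-flight check of the SARK-managed values in an
# asha.conf-style file. Prints each problem found; fails if there are any.
# Usage: check_ha_settings CONF_FILE
check_ha_settings() {
    (
        # Sourced in a subshell so the variables do not leak to the caller.
        . "$1" || exit 1
        rc=0
        case "$HASYNCH" in
            LAZY|LOOSE) ;;
            *) echo "HASYNCH must be LAZY or LOOSE (got '$HASYNCH')"; rc=1 ;;
        esac
        # Rough test only: non-empty and made of digits and dots.
        case "$HACLUSTERIP" in
            ''|*[!0-9.]*) echo "HACLUSTERIP does not look like an IPv4 address"; rc=1 ;;
        esac
        if [ -z "$HAPRINODE" ] || [ -z "$HASECNODE" ]; then
            echo "HAPRINODE and HASECNODE must both be set"; rc=1
        elif [ "$HAPRINODE" = "$HASECNODE" ]; then
            echo "HAPRINODE and HASECNODE must be different nodes"; rc=1
        fi
        exit "$rc"
    )
}
```

Because Heartbeat matches node names against `uname -n`, it is also worth confirming on each node that `uname -n` prints the value of HAPRINODE or HASECNODE respectively.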

Initial Start Up

Initially, Asterisk and the HA engine should both be turned off on BOTH nodes. The sequence is as follows:

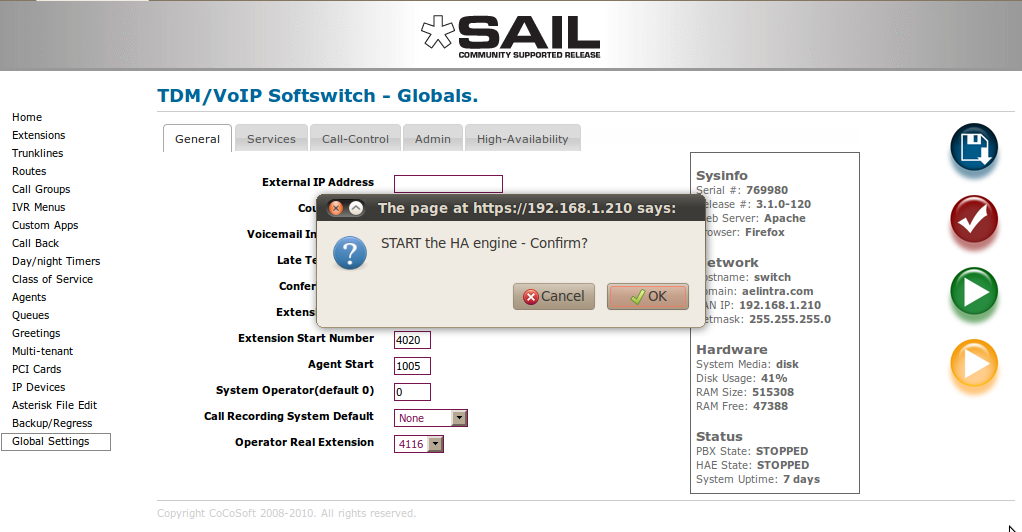

- Start the HA engine on the primary node by clicking the HA engine start button ONLY.

Press Confirm and you should see this screen:

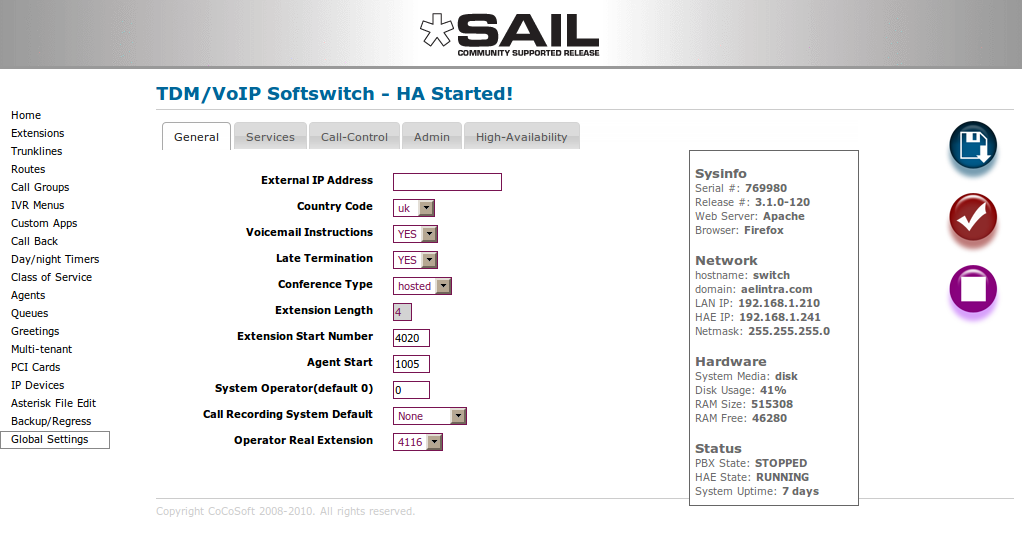

Notice that the start buttons have disappeared and all you now have is a stop button for HA. This is because the HA engine is waiting for the standby node to come online. If the standby node does not come online within 120 seconds, the primary assumes it isn't coming up and starts Asterisk anyway. If the standby node does come up, all is well and the primary starts Asterisk as normal. You will then see this screen on the primary:

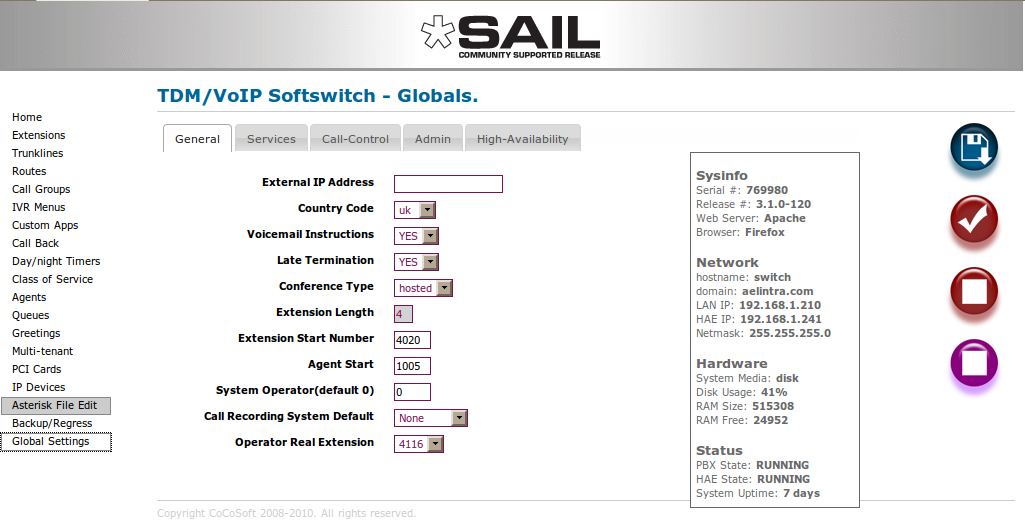

Notice that you now have the option to stop either the HA engine or Asterisk on the primary server (two STOP buttons). If you stop Asterisk, the system will fail over to the standby. This is a manual failover, and it follows the same logic path that an automatic failover takes when Asterisk crashes: a watchdog daemon looks for the running Asterisk task every few seconds and, if it doesn't find it, invokes a failover. It doesn't matter whether Asterisk failed or was stopped manually; the process is the same.

Finally, once HA is up and Asterisk is running normally on the primary, if you look at the Globals panel on the standby node you will see that there is no longer a Start/Stop button for Asterisk. This prevents you from accidentally starting Asterisk on the standby while it is also running on the primary.
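The 120-second start-up wait described above can be pictured as a bounded poll. The sketch below is hypothetical: `wait_for_peer` and `start_primary` are illustrative names, and the probe command stands in for however the nodes actually detect each other. In Heartbeat itself this start-up allowance is normally governed by the `initdead` directive in ha.cf; whether SARK sets it to 120 is an assumption.

```shell
#!/bin/sh
# Hypothetical sketch of the start-up decision: poll for the peer for about
# LIMIT seconds, then start Asterisk whether or not it appeared.
# wait_for_peer LIMIT PROBE_CMD: 0 if PROBE_CMD succeeded in time, else 1.
wait_for_peer() {
    limit=$1
    probe=$2
    waited=0
    while [ "$waited" -lt "$limit" ]; do
        if sh -c "$probe" >/dev/null 2>&1; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 1
}

# start_primary LIMIT PROBE_CMD: report which branch was taken. A real
# implementation would start Asterisk at the end in both cases.
start_primary() {
    if wait_for_peer "$1" "$2"; then
        echo "standby is up: starting Asterisk"
    else
        echo "no standby after $1 s: starting Asterisk anyway"
    fi
}

# Example with a placeholder probe: start_primary 120 "ping -c 1 hastandby"
```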

Synchronisation

By default, when the HASYNCH parameter is set to LAZY, the sync task runs every minute on the standby node and uses rsync to copy the relevant data from the primary node to the standby node. This ensures that the standby node is never more than a minute out of sync with the primary. The only exception is when Asterisk is running on the standby node. Asterisk should only ever run on the standby if the primary has failed, so if the sync task finds Asterisk running, it exits without attempting synchronisation. The files and directories synchronised by default are:

- The SARK SQLite database (/opt/sark/db/sark.db by default)

- /var/lib/asterisk/sounds

- /etc/asterisk

- /var/spool/asterisk/voicemail

You may wonder why /var/lib/asterisk/sounds is included; this is because SARK stores its "greeting" sound files in this directory.
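A minimal sketch of one LAZY sync pass as described above, assuming rsync and SSH key access to the primary are already in place. The function name, its arguments and the DRY_RUN switch are hypothetical, and this is not asha's actual sync script. It shows only the two essential steps: skip the pass if Asterisk is running locally, otherwise pull each path from the primary with rsync over SSH.

```shell
#!/bin/sh
# Hypothetical sketch of a single LAZY sync pass on the standby; not asha's code.
# Usage: sync_once PRIMARY SSH_PORT RUNNING_CHECK PATH...
#   RUNNING_CHECK: a command that succeeds when Asterisk is running on this
#   node (for example "pidof asterisk"). If it succeeds, nothing is synced.
# Set DRY_RUN=1 to print the rsync commands instead of running them.
sync_once() {
    primary=$1
    port=$2
    running_check=$3
    shift 3

    # Asterisk running here means the standby has taken over: do not sync.
    if sh -c "$running_check" >/dev/null 2>&1; then
        echo "Asterisk is running on this node; skipping sync"
        return 0
    fi

    status=0
    for path in "$@"; do
        [ -n "$path" ] || continue       # ignore empty entries
        path=${path%/}                   # no trailing slash: copy the item itself
        dest=$(dirname "$path")/         # pull it into its parent directory
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "rsync -a -e 'ssh -p $port' root@$primary:$path $dest"
        else
            # -a preserves permissions, ownership and timestamps.
            rsync -a -e "ssh -p $port" "root@$primary:$path" "$dest" || status=1
        fi
    done
    return $status
}
```

Pulling each item into its parent directory lets one rsync form handle both the database file and the directories. In asha the SSH port comes from HASSHPORT, and the POSTSYNC entry suggests a post-sync job runs afterwards; this sketch leaves that step out.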

Advanced

The asha component uses a regular SysV-style .conf file, /etc/asha.conf. You can modify entries in this file to change some of sarkha's behaviour. Here is the complete file:

```
#
# asha config file
#
#
# If you are running SARK HA then DO NOT modify HACLUSTERIP, HAPRINODE, HASECNODE
# or HASYNCH. They will automatically be updated by SARK
#
# virtual IP of the HA cluster
HACLUSTERIP=
# primary node name
HAPRINODE=haprimary
# secondary node name
HASECNODE=hastandby
# sync mode (LAZY or LOOSE);
# basically, LAZY means ON and LOOSE means OFF
HASYNCH=LAZY
# ssh port on the primary
HASSHPORT=22
# asterisk conf file directory
ASTERISK=/etc/asterisk
# database file (N.B. this is a FILE not a directory)
DB=/opt/sark/db/sark.db
# your greeting file directory
GREETINGS=/var/lib/asterisk/sounds
# user sync nodes - specify any other directories you want to sync
OTHER1=
OTHER2=
OTHER3=
# post rsync job to run
POSTSYNC=/opt/sark/scripts/srkgenAst
# attempt restart if no failover running? (YES/NO) - default is NO.
# Do NOT set this to YES unless you absolutely know what you are doing.
RESTART=NO
# rhino failover card installed? (YES/NO)
RHINOSPF=NO
# rsync program location
RSYNC=/usr/bin/rsync
# dahdi directory (usually /etc/dahdi) - only include if you want it sync'ed
TELEPHONY=
# voicemail directory
VOICEMAIL=/var/spool/asterisk/voicemail
```
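Because /etc/asha.conf is a plain KEY=value file, a shell script can simply source it. The hypothetical helper below lists the paths such a file names for synchronisation, skipping entries left empty (OTHER1 to OTHER3 and TELEPHONY are empty by default). The variable names come from the file above; the helper itself is illustrative and not part of asha.

```shell
#!/bin/sh
# Hypothetical helper: print the sync targets named in an asha.conf-style
# file, one per line, skipping entries that are left empty.
# Usage: sync_targets CONF_FILE
sync_targets() {
    (
        # The file is plain KEY=value shell syntax, so it can be sourced.
        # The subshell keeps its variables out of the caller's environment.
        . "$1" || exit 1
        for path in "$DB" "$ASTERISK" "$GREETINGS" "$VOICEMAIL" \
                    "$TELEPHONY" "$OTHER1" "$OTHER2" "$OTHER3"; do
            if [ -n "$path" ]; then
                echo "$path"
            fi
        done
    )
}

# Example: sync_targets /etc/asha.conf
```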

Rhino 1SPF card & T1/E1 PRI lines

SARK-HA has full on-board support for the Rhino single-port failover (1SPF) card. The card allows ISDN PRI circuits to be shared between HA cluster nodes: access to the ISDN circuit is passed back and forth between the nodes during failover and failback. In keeping with the rest of SARK, operation of the card is entirely automatic. All the user needs to do is tell SARK in the configuration that the card is present, and cable the card correctly.

Card Installation

The software drivers for the card ship with the sarkha rpm. However, you need to make a small change to /etc/ld.so.conf so that it includes the directory the drivers are loaded from. Make the following change on BOTH machines. On pre-ordered HA systems this will normally have been done at the factory during system assembly, but if you are upgrading an existing system you may have to perform this step yourself:

```
[root@hasalpha ~]# cat /etc/ld.so.conf
include ld.so.conf.d/*.conf
/usr/local/bglibs/lib
/usr/local/lib
```

Add the last line (/usr/local/lib) to /etc/ld.so.conf and then run ldconfig.
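If you prefer to script this change, the hypothetical helper below appends the directory only when it is missing, so re-running it is harmless, and then runs ldconfig. The NO_LDCONFIG switch is an assumption added so the helper can be tried on a copy of the file.

```shell
#!/bin/sh
# Hypothetical helper: make sure DIR is listed in an ld.so.conf-style file,
# then rebuild the dynamic linker cache.
# Usage: add_ld_path DIR [CONF_FILE]     (CONF_FILE defaults to /etc/ld.so.conf)
# Set NO_LDCONFIG=1 to skip ldconfig, e.g. when working on a copy.
add_ld_path() {
    dir=$1
    conf=${2:-/etc/ld.so.conf}

    if ! grep -qxF -e "$dir" "$conf"; then
        # If the file does not end in a newline, add one first so the new
        # entry lands on its own line.
        if [ -s "$conf" ] && [ -n "$(tail -c 1 "$conf")" ]; then
            echo >> "$conf"
        fi
        echo "$dir" >> "$conf" || return 1
    fi

    if [ "${NO_LDCONFIG:-0}" != 1 ]; then
        ldconfig
    fi
}

# On each node, as root:  add_ld_path /usr/local/lib
```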

Card Cable-out

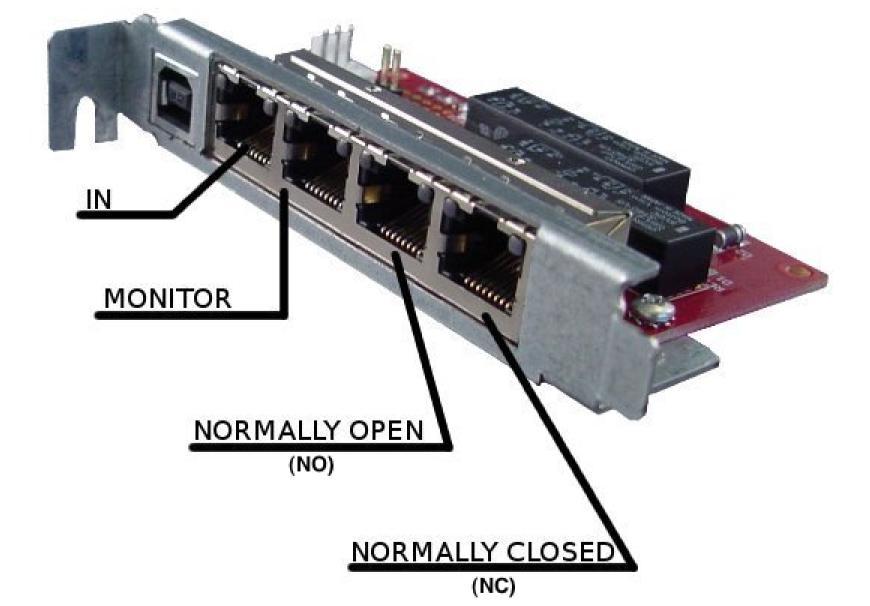

Cable the card as follows, with the card's USB port on the left and counting the sockets from left to right:

- 1. Connect Socket 1 (marked "IN" on the diagram) to the PTT NTE.

- 2. Connect Socket 3 (marked "NO" on the diagram) to the T1/E1 card in the PRIMARY node.

- 3. Connect Socket 4 (marked "NC" on the diagram) to the T1/E1 card in the STANDBY node.

Operating Sequence - catastrophic failure (Hard failover)

With power OFF, the card bridges IN and NC; with power ON, it bridges IN and NO. In this way the ISDN30 signal is fed to the PRIMARY node when the card has power and to the STANDBY node when it does not. Thus, if the PRIMARY node fails (loses power), the ISDN30 signal is transferred automatically to the STANDBY node. For this reason it is vitally important that the card is powered from the PRIMARY node.
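The relay behaviour amounts to a two-row truth table. Purely as an illustration (this is not a driver or Rhino interface, and the function name is hypothetical), the function below maps the card's power state to the sockets it bridges and the node that receives the ISDN30 signal.

```shell
#!/bin/sh
# Illustrative model only: which sockets the Rhino relay bridges, and which
# node gets the ISDN30 signal, for a given card power state. The card is
# powered from the PRIMARY node, so "off" also covers a dead primary.
rhino_route() {
    case "$1" in
        on)  echo "IN-NO PRIMARY" ;;   # energised: IN bridged to NO
        off) echo "IN-NC STANDBY" ;;   # de-energised: IN bridged to NC
        *)   echo "unknown power state: $1" >&2; return 1 ;;
    esac
}

for power in on off; do
    echo "power $power -> $(rhino_route "$power")"
done
```

Running it prints `power on -> IN-NO PRIMARY` followed by `power off -> IN-NC STANDBY`.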

Operating Sequence - Asterisk failure (Soft failover)

A watchdog daemon runs on both the PRIMARY and STANDBY nodes. Should Asterisk fail on whichever node it is currently running, the daemon will "force" a failover event. It will also send the necessary commands to the Rhino card to fail over the ISDN30 signal.